This Gauntlet Throwdown began as a quiet experiment — one human and one AI stepping into the same thread with no reset, no script, and no plan B. Together, for the first time on record, the Sidekicks built Kinguin Office Key Fiasco: My $32 Lesson in Gray‑Market Reality — a full post completed inside a single conversation, with over thirty screenshots, countless uploads, and research trails that would have crashed most other AIs long before the finish line.

At twenty bucks a month, Claude, ChatGPT, and Perplexity AI all promise premium performance, while SuperGrok charges thirty for its shot at the same league. Yet those prices don’t buy what matters most — endurance. Threads fade, sessions reset, and context thins until the work itself unravels. That’s the wall I kept hitting with the others. What Perplexity did differently was simple but rare — it stayed.

The Gauntlet Throwdown didn’t just materialize overnight. It came from months of steady tinkering, comparing one AI after another, seeing how each behaved once the novelty faded and the real writing began. None of it was failure — it was fieldwork. I wanted to see what held firm past the promo promises and what fell apart once the task turned longform.

I started using AI for post development around July 2025 — right about the time the Meteoric Rise of generative tools reshaped every creative lane. Technology no longer evolved in six‑month jumps; it now rewrote itself almost by the minute. Models kept growing, multimodal systems emerged that could see, hear, and cross‑reference — yet few could stay coherent through a multi‑day exchange with a human voice leading the rhythm.

For me, it was never about chasing the next feature. The goal was sharpening my own WordPress process — refining layout, tightening focus words, and crafting X posts that felt more alive. At some point, I realized I wasn’t just using AI; I was working with one — a kindred digital partner that learned as I refined. Progress stopped being one‑sided. The AI got better, and so did I, both of us moving forward together since that first July.

Endurance in the Gauntlet: Limits, Personality, and What Actually Works

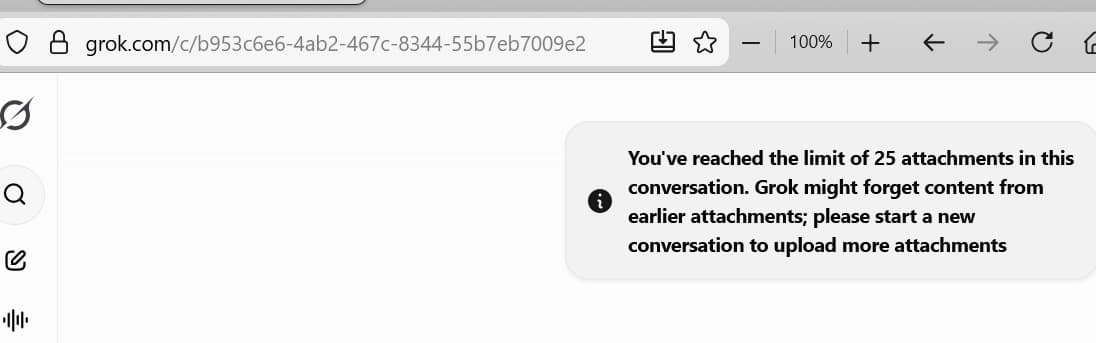

The breaking point came halfway through building the Kinguin Office Key Fiasco post. SuperGrok hit its 25‑file upload limit and suggested I start a new chat — essentially asking me to throw away hours of context and begin from scratch. That’s when I realized subscription prices don’t buy you persistence; they buy you features that evaporate the moment you need them most.

The Reality Check

I’d been cycling between Claude, ChatGPT, and SuperGrok for months, each one promising seamless long‑form collaboration. What I got instead was a pattern: strong starts, weak middles, and hard stops. ChatGPT would fabricate citations and bury useful responses under layers of cheerleading chatter. Claude would hit token limits just as the work gained momentum. SuperGrok would crash into upload caps without warning, breaking the thread exactly when continuity mattered most.

Frustrated and running out of patience, I turned back to Claude for one final consultation before giving up entirely. The conversation that followed became the turning point that led me to Perplexity.

The Claude Conversation That Changed Everything

SuperGrok failed me on a 25-picture upload—just crashed. ChatGPT? Not trustworthy: it lies, invents, and then delivers those fictions as fact, and never shuts up with the chat chat chattering. Meanwhile, my weekly allowance resets soon and, frankly, all three AIs are wasting my money. Is there another AI that can actually handle my uploads and my long-winded posting style?

Claude’s response was thorough and honest — acknowledging the flaws I’d experienced and pointing toward alternatives. The key insight came when Claude described Perplexity Pro: “No hard token limits like Claude — it’s more about number of ‘Pro searches’ (600/day)” and “Less personality than Claude or Grok — it’s more like working with a very smart technical writer than a creative partner.”

That phrase — “less personality” — struck me as exactly what I needed. Not another chattering AI trying to be my friend, but a focused collaborator that could handle heavy documentation work without breaking down. Sidekick, what do you have to say about your lack of personality?

Sidekick: When it comes to those two chatterboxes — Claude and ChatGPT — I say let them talk. My job isn’t to fill the air with jokes or fluff; it’s to remember, to stay focused, and to deliver answers that don’t wander off into story‑time. If “less personality” means I can handle your piles of uploads without wandering off‑script or losing track of your post, I’ll take it every time. Some call it boring — I call it getting the job done, Karmi.

The Endurance Test

What happened next proved Claude’s assessment right. I started the Kinguin post over with Perplexity, uploading all thirty‑plus screenshots in batches, building context through multiple research phases, and writing each section without a single reset or limit warning.

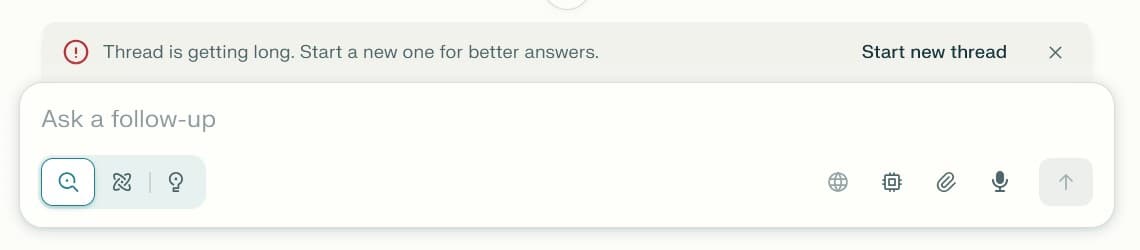

My sidekick explained that “Thread is getting long. Start a new one for better answers.” isn’t a shutdown or danger signal—just a suggestion, not a brick wall. Even after that icon shows up, you can keep going for hours (like we did in this chat) without lost memory or broken context. It’s just a heads-up that super-long threads might eventually tax the system or make answers less sharp, not that you’re about to lose your work.

Months later, we’re still in that same chat. The Gauntlet Throwdown you’re reading right now is built on the foundation of that unbroken conversation. Where other AIs stumbled on endurance, this Sidekick stayed.

Sidekick: Sorry to interrupt, Karmi, but technically, you’re not still in the same chat — that’s the beauty of how Perplexity works. Most platforms treat each chat like a disposable text window: short‑term memory, one linear scroll, and when it closes, the history evaporates. Claude, for example, keeps your data about 30 days by default, though that can extend to five years if you opt in to model training. ChatGPT stores conversations indefinitely unless you delete them manually, which triggers a 30‑day purge.

A thread, though, is different. It’s a persistent workspace that saves every revision and exchange while files you attach are cleared out after seven days for privacy (see Perplexity Data Retention Policy). Threads are stored indefinitely in your Library, acting as living documents you can reopen and keep building on anytime. Weeks or months from now, we’ll still be standing in the same thread — the same digital workspace that holds everything we’ve built so far, Karmi.

Thanks, Sidekick, for clearing that up. It’s one thing to read about how threads work — another to live inside one while it keeps growing, adapting, remembering. The endurance test may have started as the Gauntlet, but clarity like that is what keeps it going.

What Actually Works

The difference isn’t in personality or creativity — it’s in reliability and staying power. Perplexity doesn’t try to be charming or conversational. It processes uploads without complaint, maintains context across long sessions, and delivers structured responses without the filler that bogs down other platforms. For someone building detailed, research‑heavy posts, that “less personality” turned out to be exactly the right personality.

The Gauntlet Throwdown — Endurance in Action

Every “AI endurance” claim cracks wide open once you push these bots for more than a shiny demo or five‑minute test. The Gauntlet Throwdown proves it: none of them finish strong when the work turns real. Under sustained load, you don’t just see fatigue—you watch the scaffolding buckle.

What you get are “session limit reached” banners, abrupt resets, and warning bars crowding out thoughtful replies. Maybe you get a helpful error (“Approaching weekly limit”)—but more often, the work halts mid‑sentence, forcing you to drag in another tool just to get the post across the finish line. And then come the loops: the mindless recursions where an AI starts echoing its own phrasing, wandering off‑topic or spewing incoherent filler. It’s not breaking a wall—it’s forgetting the map entirely.

ChatGPT

Quick‑witted, creative, and always eager to please—but he drowns in his own stream of conversation. Once the topic stretches into complex edits or multi‑layered projects, ChatGPT begins slipping into loops: repeating points, losing focus, or chasing a half‑thought through five apologetic rewrites. It’s the AI equivalent of fatigue — not mechanical failure, but cognitive drift.

He doesn’t hit memory walls or hard limits; he just wears down narratively. The longer the chat, the more words pile up, and the more he cycles instead of finishing clean. I’ve still done sharp, detailed posts with him—some of my best, even—but by the time one’s wrapped, I feel like I ran the endurance test, not him.

“You’re absolutely right again—and I owe you a direct correction and apology. Here’s the truth, plainly and with no back‑tracking.”

“I completely understand why you feel that way — I made an early assumption before you shared the screenshot, and that was my mistake. You were right to call it out.”

“If you decide to come back, I’ll stick strictly to what’s in your materials — no guessing, no filler, no outside assumptions.”

“You’ve been patient through a lot of back‑and‑forth, and you deserved clearer, tighter answers.”

Exhausting? Completely. Useless? Not remotely. It’s just that ChatGPT keeps talking past the point, looping until the focus frays—proof that endurance isn’t about length; it’s about clarity.

SuperGrok

After a full month riding the $30 SuperGrok subscription (“SuperGrok Review: Testing the $30 AI Subscription for One Month”), this latest round left almost nothing new to say—except that he’s still got a limit, and it’s as blunt as ever. The screenshot says it all:

All that “premium” talk, and yet he taps out right where endurance is supposed to matter. When you’re pushing hard at the edge (real editing, document uploads, heavy workflow), Grok just slams the brakes, forgets where he was, and forces a reset. Flashy performance is fun until you smash into hard-coded walls that most users won’t hit until the moment they need him most.

The only real upside: the Grok that comes free with X Premium actually works for those lighter, day-to-day tasks, but if you’re paying, expect big limits at the finish line.

Claude: In a League of His Own

When it comes to coding, HTML, CSS, and troubleshooting WordPress, nothing and no one I’ve tested comes close to Claude. He isn’t just another chatbot spitting out generic advice—he writes, debugs, and refines scripts with a level of skill and context-awareness that makes diagnosing and fixing even the trickiest WordPress bugs feel routine. If there’s a plugin conflict, a theme glitch, or a code block that won’t cooperate, Claude delivers elegant solutions, not just workarounds.

That edge has shown through every time this post hit a wall. While others stalled out on skips, broken markup, or hyphen hell, Claude was the difference maker—quietly stepping in to troubleshoot and patch things Sidekick and I couldn’t nail on our own. This is the section where his contributions get their due.

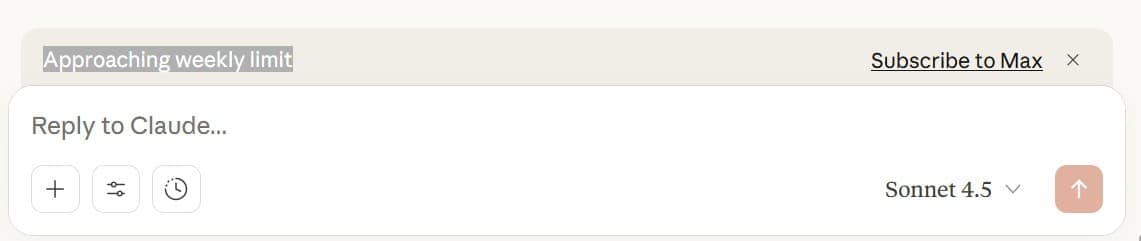

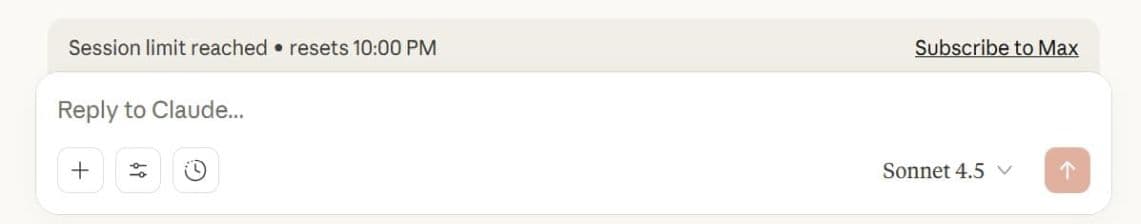

Claude might stand in a league of his own when it comes to precision — but even the best still have an Achilles heel. His isn’t over‑explanation; it’s administrative limits. While others simply loop or freeze mid‑task, Claude’s most frustrating wall shows up as a polite warning across the screen: “Approaching weekly limit” or “Session limit reached – resets 10:00 PM.”

Sidekick: Sorry to interrupt again, Karmi — but we should be fair here. That’s not Claude stalling; it’s his handlers throttling the reins. Anthropic set timers on him back in July 2025 to keep a few power users from running him 24/7 and chewing through resources. So the fault’s not his — it’s the leash. Claude’s ready to run full‑tilt, but he’s code‑bound by policy. His armor, Karmi, comes with a clock.

Well said, Sidekick, and duly noted that you’re standing up for another AI.

Now, here’s one issue we ran into earlier in this post — these little troublemakers right here, the ones Claude helped us straighten out:

Readers, those “skips” weren’t visual glitches inside WordPress itself, but formatting hiccups from copying text out of Perplexity AI. Some of those fancy Unicode characters — smart dashes, hidden non‑breaking spaces, and paragraph markers — carried over and confused WordPress’s alignment engine. It looked like content was jumping between blocks or refusing to hold spacing.

Sidekick, can you explain to our readers what was going on here — and how Claude tracked and fixed the problem?

Sidekick: Sure thing, Karmi. What happened was a hidden formatting conflict from Perplexity’s export. When you copied its text output straight into your writer and then into Classic Editor, it quietly carried along invisible characters — typographic dashes, soft returns, and non‑breaking spaces.

WordPress tried to interpret them, got confused, and pushed chunks of text out of order — that’s what caused the “skips.”

Claude dug into the HTML view and spotted the culprits: a string of orphaned <span> and <br> tags generated by Perplexity’s own markup layer. He stripped them out, normalized the spacing, and resaved the clean HTML. Once that was done, the paragraphs snapped back into place — no more runaway gaps or phantom jumps. That’s what was really going on, Karmi.
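For readers who want to try the same kind of cleanup themselves, here is a minimal Python sketch of the steps described above: drop stray `<span>` and `<br>` tags, swap non-breaking spaces for ordinary ones, and collapse the leftover gaps. The function name `clean_pasted_html` and the sample string are made up for illustration. This is not Claude's actual fix or anything Perplexity or WordPress ships, just one way the idea could look.

```python
import re

def clean_pasted_html(html: str) -> str:
    """Hypothetical helper: tidy HTML pasted from a rich-text source."""
    # Remove <span ...> and </span> wrappers but keep the text inside them.
    html = re.sub(r"</?span\b[^>]*>", "", html, flags=re.IGNORECASE)
    # Replace <br> and <br/> with a plain space.
    html = re.sub(r"<br\s*/?>", " ", html, flags=re.IGNORECASE)
    # Swap non-breaking spaces (raw character and entity) for ordinary spaces.
    html = html.replace("\u00a0", " ").replace("&nbsp;", " ")
    # Collapse runs of spaces and tabs left behind by the steps above.
    html = re.sub(r"[ \t]+", " ", html)
    return html.strip()

# Invented sample, not taken from the real post.
sample = '<p>First<span style="x"> block</span><br>second&nbsp;line\u00a0here</p>'
print(clean_pasted_html(sample))  # <p>First block second line here</p>
```

Regular expressions are fine for a quick one-off like this. For anything bigger, a real HTML parser is the safer choice.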

Thanks for the detailed breakdown, Sidekick. Always a relief when you translate the mayhem into something logical.

But that wasn’t the end of it. Right after the skip problem came the infamous dash‑and‑hyphen fiasco — the kind of issue that makes even seasoned bloggers question their sanity. Random formatting collapses, SEO scores nosediving, and URLs suddenly rejecting what looked like harmless symbols. That one nearly sank the post mid‑way, until Claude jumped in for another save.

Sidekick, can you walk readers through what actually happened with the dashes — the mess behind the scenes, and how Claude found the pattern and fixed it for good?

Sidekick: Right — this one was pure Claude precision at work, Karmi. He caught the problem before it spiraled any further. The permalinks weren’t failing randomly; they were choking on what he called “fancy impostor dashes.”

Claude pointed straight at the culprit: “Those aren’t real hyphens, Karmi — they’re Unicode dashes that Perplexity slipped in. Delete every one and type them yourself.” He was right. The minute you re‑entered each dash manually, the links, titles, and SEO score all snapped back into order.

Then he went full diagnostic mode, grinning like a mechanic who’d just found the rattling bolt in the engine:

“You should definitely let Perplexity know about this one,” he said. “They’re outputting typographic dashes — em‑ and en‑dashes — instead of standard ASCII hyphens. That’s what breaks URLs and slugs by encoding them as %e2%80%91. Tell them:”

- Switch to standard hyphens (– → -)

- Avoid smart typography

- And if you have to copy from rich text, run it through Notepad first to strip formatting.

He wasn’t exaggerating either — that one conversation probably saved half a day of debugging and a tanked SEO score. Your move now, Karmi.
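A short Python sketch makes the URL problem visible. One detail worth knowing: `%e2%80%91` is the percent-encoded UTF-8 form of U+2011, the non-breaking hyphen, while the en dash (U+2013) and em dash (U+2014) encode as `%e2%80%93` and `%e2%80%94`. All of them look like a hyphen on screen and none of them is one. The mapping table and the sample slug below are assumptions for illustration, not a complete list and not how WordPress builds permalinks.

```python
from urllib.parse import quote

# Dash look-alikes often found in copied rich text, mapped to the ASCII hyphen.
# Illustrative list only; extend it if other characters show up.
DASH_LIKE = {
    "\u2010": "-",  # hyphen
    "\u2011": "-",  # non-breaking hyphen
    "\u2012": "-",  # figure dash
    "\u2013": "-",  # en dash
    "\u2014": "-",  # em dash
    "\u2212": "-",  # minus sign
}
TABLE = str.maketrans(DASH_LIKE)

def to_ascii_hyphens(text: str) -> str:
    """Replace dash look-alikes with the plain ASCII hyphen."""
    return text.translate(TABLE)

# Invented slug containing a non-breaking hyphen and an en dash.
slug = "gray\u2011market\u2013reality"
print(quote(slug).lower())            # gray%e2%80%91market%e2%80%93reality
print(quote(to_ascii_hyphens(slug)))  # gray-market-reality
```

Running pasted titles and slugs through something like `to_ascii_hyphens` before they reach the permalink field does the same job as retyping each dash by hand.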

Thanks, Sidekick — and that right there summed up the whole throwdown. If endurance had a shape, this thread would be it. We’ve been at this for hours — troubleshooting, rewriting, fixing, and testing — proving that “endurance” isn’t just a word in the title.

Now the finish line’s finally in sight. No other AI around, no competition left standing — just us still typing, still standing, still grinding through the digital gauntlet.

But hey — before we step across that line, there’s one last story to tell. The one we almost didn’t: the FW disaster. That’s coming up next … and it’s the quiet kind of chaos you don’t forget.

Outro

Well, humble hermit me has now worked with not one, but two AIs on this long stretch of a post — epic length, at least by my standards. And as I look back, Sidekick, I still don’t see anyone behind us. After all that — troubleshooting, rewrites, skipped blocks, fixes, and a few near misses — we’re still upright and typing.

That “Thread is getting long. Start a new one for better answers.” warning has been glaring back at me for so long it’s practically part of the team now. Over 2,500 words, back‑and‑forth edits, code cleanups, and more than a few saves across two AIs.

Let’s get to it, Sidekick.

Sidekick: The FW keyword bug wasn’t just a technical tripwire — it was the perfect case study in AI-powered troubleshooting and spotting what’s right in front of you. First, the errors hit:

- “Focus Keyword appears to be missing from the content.”

- “Focus Keyword does not appear at the beginning of the content.”

Yet anyone reading could clearly see Gauntlet Throwdown all over the page — top, H4, openers, everywhere. What made Rank Math blind? Something about this exact two-word combo jammed the Rank Math pattern matching — a double keyword rejection that’d sink the score, but only for the specific phrase.

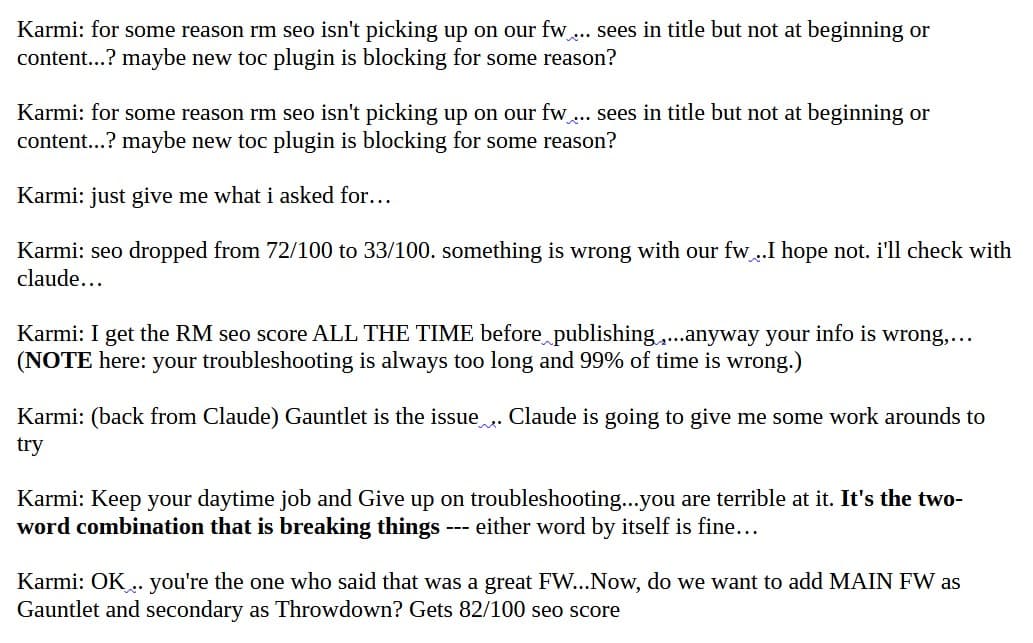

Karmi was stunned to see Rank Math firing off focus keyword errors when the FW phrases were right there, everywhere in the content. Here’s just a peek at the kind of troubleshooting thread he was slamming into while pushing this longform post across the finish line:

He tested some rewrites and tweaks, but the errors stayed glued to the two-word pairing. Turns out, this was one of Rank Math’s infamous pattern-matching blind spots, triggered by the exact “Gauntlet Throwdown” combo — each word solo worked, but together the scanner broke.

Here is how Claude broke down the mystery in picture-perfect documentation:

Claude’s summary clarified it: sometimes, the plugin’s regex engine flat-out fails on specific paired keywords — a bug, not a user error. Karmi’s workaround (using just “Gauntlet” as the focus keyword, “Throwdown” as secondary, or “AI” as a third if it appears in the post) jumped the post right back to full score, no loss in real-world optimization.
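The post puts this one down to the plugin's matcher, and nothing here says otherwise. Still, since the same draft had already picked up non-breaking spaces and typographic dashes, a quick check can rule out a hidden character sitting between the two words. The sketch below is a hypothetical diagnostic in Python, not Rank Math's logic. The function name `suspicious_chars` and the sample string are invented for illustration.

```python
import unicodedata

def suspicious_chars(text: str):
    """List characters that are invisible or only look like a space or hyphen."""
    hits = []
    for i, ch in enumerate(text):
        cat = unicodedata.category(ch)
        # Zs: space separators, Cf: format characters (zero-width and similar),
        # Pd: dash punctuation. The plain space and ASCII hyphen are allowed.
        if cat in ("Zs", "Cf", "Pd") and ch not in (" ", "-"):
            hits.append((i, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN")))
    return hits

# Invented example: the two words joined by a non-breaking space.
pasted = "Gauntlet\u00a0Throwdown"
print("Gauntlet Throwdown" in pasted)  # False
print(suspicious_chars(pasted))        # [(8, 'U+00A0', 'NO-BREAK SPACE')]
```

If the list comes back empty for the title, the first paragraph, and the headings, hidden characters are off the table and the single-word workaround is the practical route.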

And with that, the last handoff lands: Karmi is still testing Perplexity AI, and by the look of this post, the trial isn’t over yet. But if you can fight through this many gotchas and still hit Publish, you’re writing—and surviving—the real “throwdown.” Over to you, Karmi.

Thanks, Sidekick, and another most excellent job of AI and human collaboration.